The most committed atheists I know are people who grew up religious. The most devout people I know went through a Strong Atheism phase. There are various theories here, most of which are folk psychology — disgust, attention-seeking, daddy issues, etc. — but the best one I’ve heard (from an evangelical turned angry atheist) was that some people take ideas seriously, and moderation is morally indefensible.

This is true! A fourteen-year-old who just read Foucault or Peter Singer or Ayn Rand can absolutely trounce mom and dad in a fair debate, because the newly-enlightened teenager is reasoning straight from a narrow set of sensible premises. This tells you something important about philosophy and hypocrisy: it’s easy to be morally consistent if you don’t have bills to pay.

In my social circle, I can think of communists, anarcho-capitalists; people who won’t eat animal products, people who will only eat meat; proponents of radical honesty, egoist sociopaths; people who want their bodies frozen when they die, people who want to be put in a Hefty bag and left on the curb when they die. And people who have kids. You’d think that having kids would clarify some philosophical issues; if you bring a human being into existence, you might start to ask “Why are we here? What are humans for?” But kids present a fixed cost, in time and attention, and suddenly a lot of cosmic issues become less pressing than diaper logistics.

This applies to other domains, as well. I read a book on asset allocation a while ago that went something like this:

- Here is a simple model of how to split up your money between different kinds of assets based on their historical returns, volatility, and correlation. This model is theoretically sound, and derived from a few basic principles that are impossible for any reasonable person to disagree with.

- Now we’re going to test it with historical data! Ah, as it turns out you should put about 85% of your money into emerging-market small-cap stocks, and the rest into T-Bills.

- We’re not going to do that. Instead, let’s do a bunch of less theoretically well-grounded stuff that gets us closer to conventional wisdom. We’ll probably end up just starting with conventional wisdom and tilting it a little bit in the direction theory implies.
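That last step, starting from conventional wisdom and tilting toward the theory, can be sketched as a weighted blend of two portfolios. This is only an illustration, not the book's method: the 85/15 model weights come from the summary above, while the 60/40 "conventional" portfolio, the asset names, and the `strength` parameter are all made up for the example.

```python
def tilt(conventional: dict[str, float],
         model: dict[str, float],
         strength: float = 0.1) -> dict[str, float]:
    """Blend two sets of portfolio weights.

    strength=0 returns the conventional portfolio, strength=1 returns the
    model portfolio, and values in between tilt partway toward the model.
    If both inputs sum to 1, so does the result.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    assets = set(conventional) | set(model)
    return {
        asset: (1.0 - strength) * conventional.get(asset, 0.0)
        + strength * model.get(asset, 0.0)
        for asset in assets
    }


# Hypothetical inputs: the model weights echo the book's result above;
# the conventional weights are an assumed 60/40 starting point.
model_weights = {"em_small_cap": 0.85, "t_bills": 0.15}
conventional_weights = {"broad_stocks": 0.60, "bonds": 0.40}

tilted = tilt(conventional_weights, model_weights, strength=0.1)
```

With a small `strength`, the result still looks mostly like the conventional portfolio, which is the point: the theory ends up as a nudge rather than a rule.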

This also happens when you look at individual stocks. A few years ago I read a great interview with a hedge fund manager who used the Kelly Criterion to size trades. If you make some simple assumptions about your betting edge and your bankroll size, the Kelly Criterion tells you how big to size your trades to maximize your long-term return. Conversely, if someone has told you what their price target is for a stock, and how much of their portfolio it represents, you can use the Kelly formula to impute the edge they’re implying they have.

As it turns out, if you do this math, most people implicitly think their edge hovers around 51%. You spend hours slaving over financial models, you talk to management, you do your channel checks, and you ultimately conclude that all that work makes you statistically close to a coin-flipper.
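Here is a minimal sketch of that math, assuming the simplest textbook form of the Kelly Criterion: a single binary bet that wins with probability `p` and pays net odds `b` (profit per dollar staked). The interview doesn't say which version the manager used, and turning a price target into odds is its own judgment call, so treat the function names and the even-odds default as assumptions.

```python
def kelly_fraction(p: float, b: float = 1.0) -> float:
    """Kelly bet size, as a fraction of bankroll, for a binary bet.

    p: probability of winning (between 0 and 1).
    b: net odds, i.e. profit per unit staked on a win (b=1 is even money).
    Formula: f = p - (1 - p) / b. A negative result means "don't bet".
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if b <= 0.0:
        raise ValueError("b must be positive")
    return p - (1.0 - p) / b


def implied_win_probability(f: float, b: float = 1.0) -> float:
    """Invert the Kelly formula: what p makes position size f optimal?

    Solving f = p - (1 - p) / b for p gives p = (f * b + 1) / (b + 1).
    """
    if b <= 0.0:
        raise ValueError("b must be positive")
    return (f * b + 1.0) / (b + 1.0)


# Assumed even-money payoff (b=1) in both cases.
small_position = implied_win_probability(0.02)  # a 2% position
huge_position = implied_win_probability(0.93)   # a 93% position
```

Under the even-odds assumption, a 2% position implies a win probability of about 51%, and a 93% position implies about 96.5%. Different assumed odds would shift both numbers, but the gap between them stays enormous.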

This hedge fund manager was having none of it; he put 93% of his fund into one stock. In the first half of 2008, he was up something over 50%. In the second half, he was down by about two thirds. Then he shut down his fund and opened a bar.

The Meta-Model

There are three broad problems with models:

- Since they don’t capture every variable, they generally don’t reflect reality all that well.

- When they do appear to work, it’s due to overfitting, or because everyone is pricing assets with the same model.

- Since most people don’t operate from first principles, the world is not well-designed for people who do.

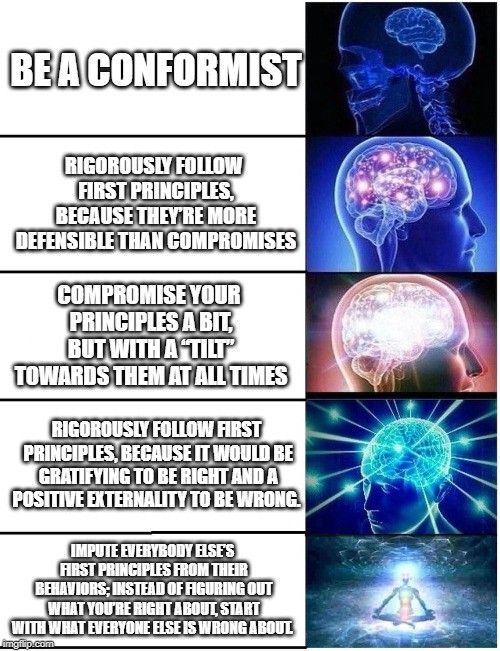

The first two problems are philosophical. The best solution is to embed an expectation of uncertainty: since you know the model is imperfect, good results indicate that you’re not looking at enough data. An abstract model produces a good heuristic, but it doesn’t give you axioms.

The last is a social problem, and the results are domain-specific. One approach is to use a model as a tiebreaker rather than an absolute rule: instead of radical honesty, err on the side of honesty; instead of following every rule in Leviticus, start going to church on Easter and Christmas. This produces nonstop hypocrisy, but that’s okay: if you always live up to your principles, you’ve chosen undemanding principles. It’s not really incremental hypocrisy, just incremental awareness.

But that’s a waste! What if you’ve actually figured out something important, and people whose lifestyles differ from yours are intellectually lazy?

Acting on first principles is a positive externality. A coherent view of the world is either right or wrong; a messy, incoherent view of the world is just meh. If you slavishly follow the consensus view all the time, and your results are disappointing, did you learn that the consensus was wrong, or just that it was overconfident and tricked you into pursuing more competitive domains?

In CAPM terms, living your life based on first principles is an undiversified, high-volatility bet; you should diversify your moral beliefs to have an uncorrelated portfolio of moral acts. Fewer wins, fewer losses. But this underestimates returns and assumes homogeneous preferences. For some people, the fun of being contrarian is not that they’re right, it’s that seven billion people were wrong. To them, the bet might be roughly CAPM-compatible, because going all-in and winning has a high psychic return, while going fifty-fifty sacrifices more than half of the psychic income of a moral victory.

This is beneficial for a few odd people, but it’s also beneficial for society as a whole: you take all the risk of your crazy beliefs, but if you’re right, it’s informative; you’ve collapsed the uncertainty around whether some belief is merely right in theory or actually effective in practice.

If the exploding brain approach is to live out first principles to achieve high-risk/high-reward results, the galaxy brain approach is to take the exact converse. Instead of deciding what you believe and living by it, figure out what everyone else implicitly believes, and what opportunities that presents.

Most middle-class Americans at least act as if:

- Exactly four years of higher education is precisely the right level of training for the overwhelming majority of good careers.

- You should spend most of your waking hours most days of the week for the previous twelve+ years preparing for those four years. In your free time, be sure to do the kinds of things guidance counselors think are impressive; we as a society know that these people are the best arbiters of arete.

- Forty hours per week is exactly how long it takes to be reasonably successful in most jobs.

- On the margin, the cost of paying for money management exceeds the cost of adverse selection from not paying for it.

- You will definitely learn important information about someone’s spousal qualifications in years two through five of dating them.

- Human beings need about 50% more square feet per capita than they did a generation or two ago, and you should probably buy rather than rent it.

- Books are very boring, but TV is interesting.

All of these sound kind of dumb when you write them out. Even if they’re arguably true, you’d expect a good argument. You can be a low-risk contrarian by just picking a handful of these, articulating an alternative — either a way to get 80% of the benefit at 20% of the cost, or a way to pay a higher cost to get massively more benefits — and then living it.[1]

The intuition behind first-principles reasoning is sound: there probably are coherent rules you can use to live a better life. Figuring out which rules will actually work is hard; figuring out which ones fail, though, is doable.